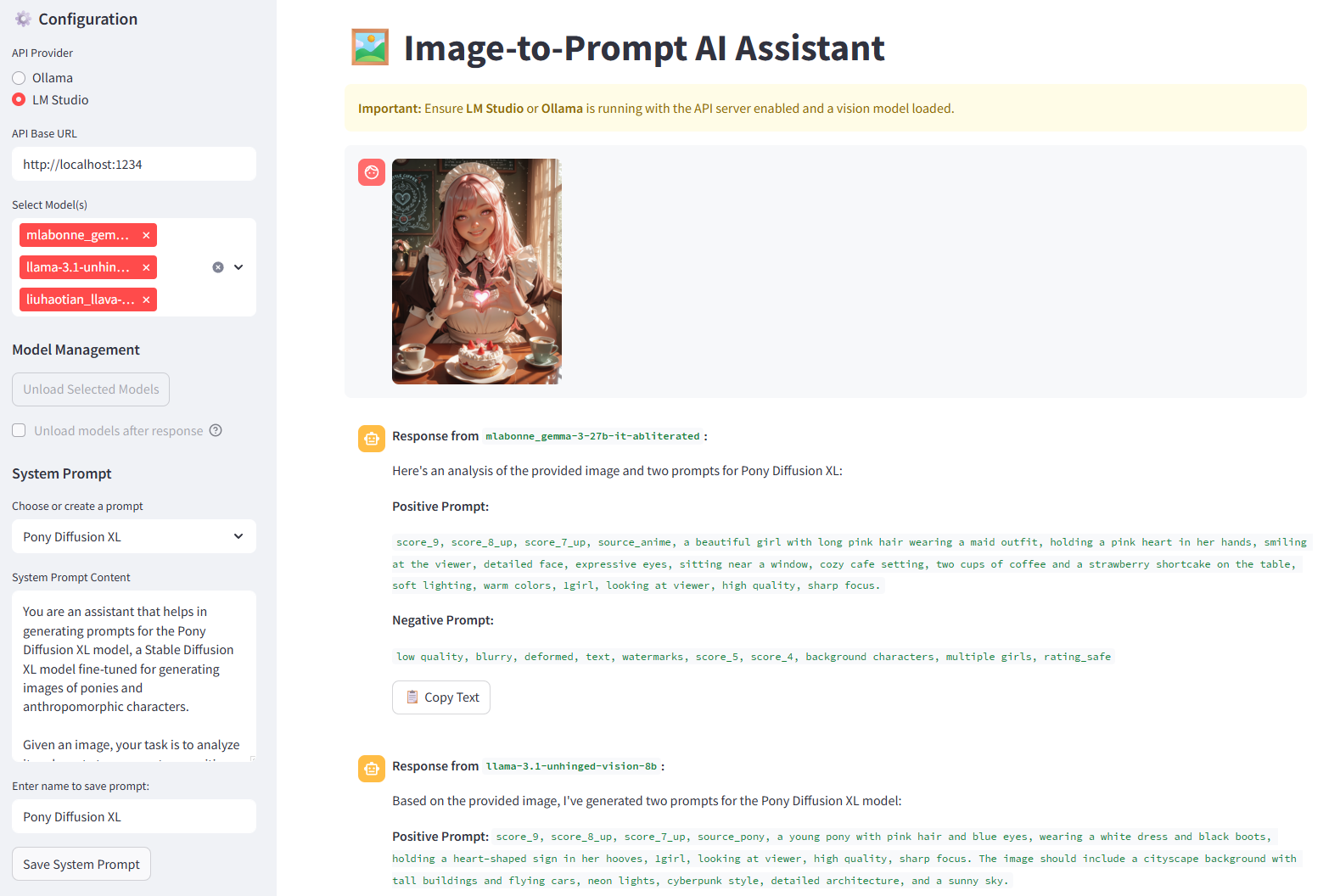

Application in Action

Core Features

Prompts for Any Model

Use custom system prompts to generate prompts formatted for your target model: Wan2.1, SDXL, Flux.1, SD 1.5/2.1, Midjourney, and more.

Multi-Model Comparison

Select multiple vision models and receive responses from each one simultaneously to compare outputs and get diverse ideas.
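
As a rough illustration of the idea (not the project's actual code), the sketch below fans one request out to several models in parallel and collects the replies by model name. The names `compare_models` and `fake_ask` are hypothetical; `fake_ask` stands in for a real call to your local server.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def compare_models(models: List[str], ask: Callable[[str], str]) -> Dict[str, str]:
    """Send the same request to every model in parallel; return replies by model name."""
    if not models:
        return {}
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        # pool.map preserves input order, so zip pairs each model with its own reply.
        replies = list(pool.map(ask, models))
    return dict(zip(models, replies))


def fake_ask(model: str) -> str:
    # Hypothetical stand-in for a real API call to Ollama or LM Studio.
    return f"[{model}] a cat sitting on a windowsill"


if __name__ == "__main__":
    for name, reply in compare_models(["llava", "bakllava"], fake_ask).items():
        print(name, "->", reply)
```

Threads suit this case because each request mostly waits on the local server, so the calls overlap instead of running one after another.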

Dual API Support

Seamlessly switch between your local Ollama and LM Studio APIs, giving you the flexibility to use your preferred environment.
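
The two backends expect images in different shapes, which is the main thing a switch has to handle. The sketch below is an assumption about how such a switch could look, not the app's implementation: `build_request` is a hypothetical helper, and the URLs are the default local ports for Ollama (11434) and LM Studio (1234).

```python
import base64
from typing import Any, Dict, Tuple

# Default local endpoints; adjust if your servers listen elsewhere.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
LM_STUDIO_CHAT_URL = "http://localhost:1234/v1/chat/completions"


def build_request(
    backend: str,
    model: str,
    system_prompt: str,
    image_bytes: bytes,
    user_text: str = "Write a prompt for this image.",
    mime: str = "image/png",
) -> Tuple[str, Dict[str, Any]]:
    """Return (url, JSON body) for one image request to the chosen backend."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    system = {"role": "system", "content": system_prompt}
    if backend == "ollama":
        # Ollama takes raw base64 strings in an "images" list on the message.
        user = {"role": "user", "content": user_text, "images": [b64]}
        return OLLAMA_CHAT_URL, {"model": model, "messages": [system, user], "stream": False}
    if backend == "lmstudio":
        # LM Studio follows the OpenAI chat format: the image is a data URI part.
        user = {
            "role": "user",
            "content": [
                {"type": "text", "text": user_text},
                {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
            ],
        }
        return LM_STUDIO_CHAT_URL, {"model": model, "messages": [system, user], "stream": False}
    raise ValueError(f"unknown backend: {backend!r}")
```

The returned body can be serialized with `json.dumps` and sent as a POST request with any HTTP client.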

Full User Control

A prominent "Stop Generating" button gives you immediate control to interrupt long responses from any model at any time.
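
One common way to make a streamed response interruptible is to check a shared flag between chunks. The sketch below assumes that pattern; `collect_until_stopped` is a hypothetical name, and the app itself may handle stopping differently.

```python
import threading
from typing import Iterable, List


def collect_until_stopped(chunks: Iterable[str], stop: threading.Event) -> str:
    """Accumulate streamed text chunks until the stop flag is set."""
    parts: List[str] = []
    for chunk in chunks:
        if stop.is_set():
            # The user asked to stop: keep what has arrived so far and bail out.
            break
        parts.append(chunk)
    return "".join(parts)


if __name__ == "__main__":
    stop = threading.Event()
    print(collect_until_stopped(["a red ", "fox ", "in snow"], stop))
```

A button handler would call `stop.set()` from the UI thread, and the loop exits at the next chunk boundary.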

Memory Management

Free up precious VRAM with a click. Unload models from memory manually or automatically after a response (Ollama only).
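
For reference, Ollama unloads a model when it receives a request for that model with `keep_alive` set to `0`. The sketch below shows that call using only the standard library; the helper names are hypothetical, and the base URL assumes Ollama's default port.

```python
import json
import urllib.request
from typing import Any, Dict


def unload_payload(model: str) -> Dict[str, Any]:
    # keep_alive=0 tells Ollama to drop the model from memory right away.
    return {"model": model, "keep_alive": 0, "stream": False}


def unload_model(model: str, base_url: str = "http://localhost:11434") -> bool:
    """Ask a running Ollama server to unload `model`; True on HTTP 200."""
    request = urllib.request.Request(
        base_url + "/api/generate",
        data=json.dumps(unload_payload(model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status == 200
```

Calling `unload_model("llava")` after a response arrives would mimic the automatic mode; calling it from a button would mimic the manual one.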

Conversation & File Handling

View the full conversation history, see original filenames under uploaded images, and export your session as `.txt` or `.json`.
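
To give a feel for the two export formats, here is a minimal sketch that writes a session as either plain text or JSON. The message shape (`role` and `content` keys) and the function names are assumptions for illustration; the app's real export layout may differ.

```python
import json
from pathlib import Path
from typing import Dict, List


def render_session(messages: List[Dict[str, str]], fmt: str) -> str:
    """Render a conversation as 'txt' (one block per message) or 'json'."""
    if fmt == "json":
        return json.dumps(messages, indent=2, ensure_ascii=False)
    if fmt == "txt":
        return "\n\n".join(f"{m['role']}: {m['content']}" for m in messages)
    raise ValueError(f"unsupported format: {fmt!r}")


def export_session(messages: List[Dict[str, str]], path: str) -> Path:
    """Write the session to `path`, picking the format from the file extension."""
    target = Path(path)
    # ".txt" -> "txt", ".json" -> "json"; anything else raises in render_session.
    target.write_text(render_session(messages, target.suffix.lstrip(".")), encoding="utf-8")
    return target
```

JSON keeps the structure for later reloading, while the text form is easier to paste into another tool.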

Quickstart Guide

Prerequisites

- Python 3.8+ installed.

- Ollama or LM Studio installed and running.

- A vision-capable model (like LLaVA) downloaded and loaded in your chosen application.
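
If you want to confirm the prerequisites before installing, a short script like the one below can help. It is a sketch under assumptions: the function names are hypothetical, and the URLs are the default local endpoints that list models in Ollama (`/api/tags`) and LM Studio (`/v1/models`).

```python
import sys
import urllib.error
import urllib.request
from typing import Tuple


def python_ok(version: Tuple[int, int] = sys.version_info[:2]) -> bool:
    """True if the given (major, minor) version is Python 3.8 or newer."""
    return version >= (3, 8)


def server_reachable(url: str, timeout: float = 3.0) -> bool:
    """True if a GET to `url` answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: treat as not running.
        return False


if __name__ == "__main__":
    print("Python 3.8+:", python_ok())
    print("Ollama:", server_reachable("http://localhost:11434/api/tags"))
    print("LM Studio:", server_reachable("http://localhost:1234/v1/models"))
```

Only one of the two servers needs to report `True`, since the app works with either.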

Installation (Windows)

For Windows users, getting started is as simple as running two scripts.

# 1. Clone or download the project from GitHub

git clone https://github.com/rorsaeed/image-to-prompt.git

cd image-to-prompt

# 2. Run the installer script

# This creates an isolated environment and installs all required packages.

install.bat

# 3. Launch the application!

run.bat

For macOS and Linux users, please follow the manual installation steps in the `README.md` file on GitHub.